Supercomputers and GPU computing.

Online configurator of supercomputers and GPU servers.

GPU computing

GPU-accelerated computing offers unprecedented application performance because the GPU processes the computationally intensive parts of the application, while the rest of the application runs on the CPU. From a user perspective, the application simply runs significantly faster.

A simple way to understand the difference between a CPU and a GPU is to compare how they perform tasks. A CPU consists of a few cores optimized for sequential processing of data, while a GPU consists of thousands of smaller, more energy-efficient cores designed to handle multiple tasks simultaneously.
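The contrast between sequential and parallel processing can be sketched in a few lines of Python. This is only an illustration of how the work is divided, not GPU code: the function names `saxpy_sequential` and `saxpy_chunked` and the worker count are hypothetical, and Python threads will not reproduce the speedup of real GPU cores. The point is that each chunk is independent, so many workers can process chunks at the same time.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_sequential(a, x, y):
    """CPU-style: a single worker walks the data element by element."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def saxpy_chunked(a, x, y, workers=4):
    """GPU-style decomposition: split the data into independent chunks
    and hand each chunk to its own worker."""
    n = len(x)
    size = max(1, (n + workers - 1) // workers)  # ceiling division
    bounds = [(start, min(start + size, n)) for start in range(0, n, size)]

    def run_chunk(bound):
        lo, hi = bound
        return [a * x[i] + y[i] for i in range(lo, hi)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns the chunk results in the order they were submitted
        parts = list(pool.map(run_chunk, bounds))
    return [value for part in parts for value in part]


x = [float(i) for i in range(10)]
y = [1.0] * 10
# Both strategies compute the same result; only the division of work differs.
print(saxpy_chunked(2.0, x, y) == saxpy_sequential(2.0, x, y))  # prints True
```

On a real GPU the same pattern is applied at a much finer grain, with each of the thousands of cores handling one or a few elements.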

High-performance computing (HPC) is a fundamental pillar of modern science. From weather forecasting to drug discovery to energy exploration, scientists constantly use large computing systems to model and predict our world. AI extends HPC, allowing scientists to analyze large amounts of data and extract useful information where simulations alone cannot provide the full picture.

NVIDIA A100 and H100 GPUs are designed to bring HPC and AI together. They form an HPC platform that excels both at the computation needed to run simulations and at the data processing needed to extract useful information.

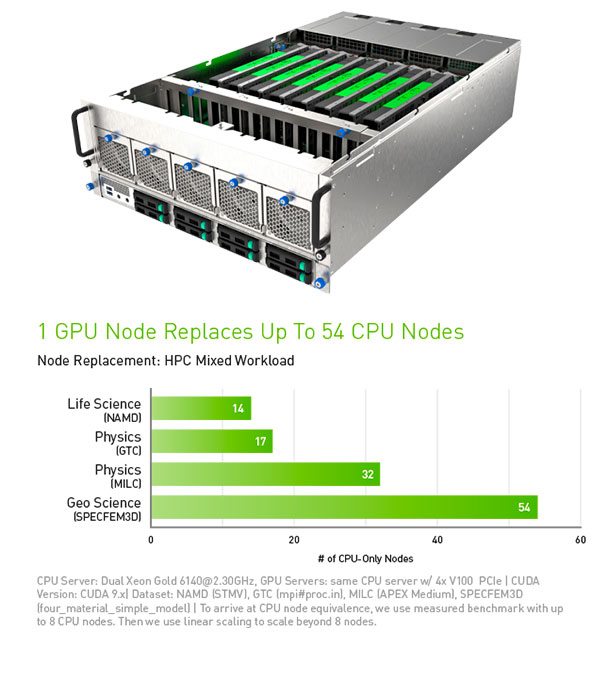

By combining CUDA cores and Tensor Cores in a single architecture, a server equipped with NVIDIA A100 or H100 GPUs can replace hundreds of traditional CPU servers for both traditional HPC and AI workloads. Now every scientist can afford a supercomputer that helps solve the most complex problems.

HIGHEST PERFORMANCE

![Turing Tensor Core animation](../../upload/medialibrary/29e/29e84edaec3e44e8c8f3b058ab2d44dd.gif)

Application performance is more than just FLOPS. Computing professionals rely on demanding applications to dramatically accelerate scientific discovery and data analytics. Their work starts with the world's fastest accelerators and includes a robust infrastructure, the ability to monitor and manage that infrastructure, and the ability to move data quickly to where it is needed. The compute platform accelerated by NVIDIA A100 and H100 GPUs provides all of this, delivering unmatched application performance for science, analytics, engineering, consumer, and enterprise applications.

Featuring fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, NVIDIA H100 GPUs deliver up to 9X faster training than the previous generation for mixture-of-experts (MoE) models. The combination of fourth-generation NVLink, which provides 900 gigabytes per second (GB/s) of GPU-to-GPU interconnect bandwidth, NVSwitch, which accelerates collective communication by every GPU across nodes, PCIe Gen 5, and NVIDIA Magnum IO™ software enables seamless scalability from small enterprise systems to large unified GPU clusters.
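To put the 900 GB/s figure in perspective, the following minimal sketch estimates an idealized GPU-to-GPU transfer time. The function name `transfer_time_ms` and the 90 GB payload are hypothetical, and the estimate assumes the quoted peak bandwidth is fully sustained, ignoring latency and protocol overhead, so real transfers will take longer.

```python
def transfer_time_ms(payload_gb, bandwidth_gb_per_s=900.0):
    """Idealized time in milliseconds to move payload_gb gigabytes
    at the given peak bandwidth (no latency or protocol overhead)."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb * 1000.0 / bandwidth_gb_per_s


# Hypothetical payload: 90 GB exchanged between two GPUs
# at the quoted 900 GB/s NVLink peak.
print(transfer_time_ms(90.0))  # prints 100.0
```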

ACCELERATE YOUR WORKLOADS WITH DATA CENTER SOLUTIONS

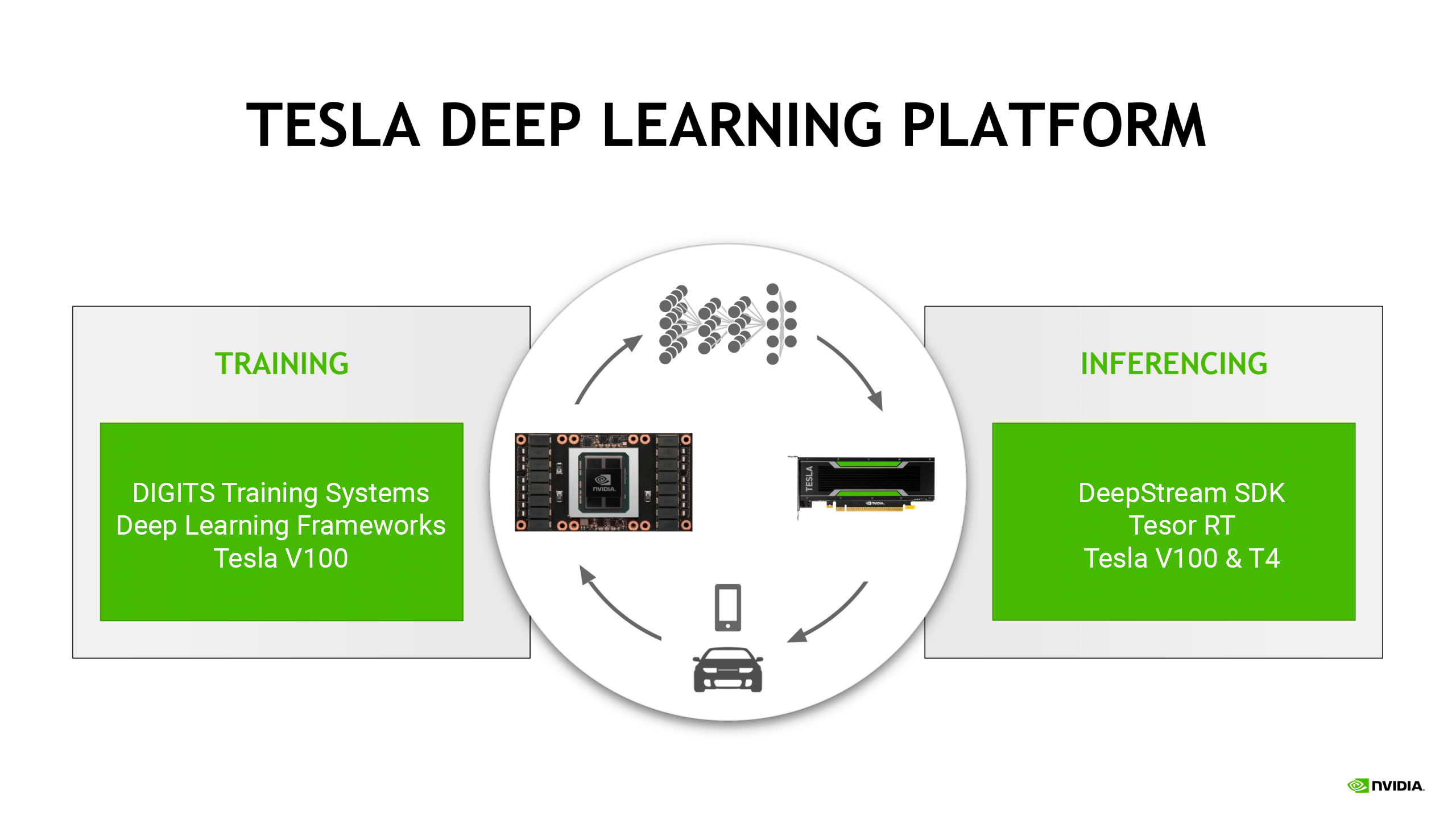

Accelerating the training of increasingly complex neural network models is key to increasing the productivity of data scientists and creating AI-based services faster. Tesla V100-based servers leverage the performance of the new NVIDIA Volta™ architecture and reduce the training time of deep learning algorithms to just a few hours.

FORSITE HPC-1000 Supercomputer

Universal hybrid server for AI/DL/ML tasks that accommodates up to 4 NVIDIA GPU accelerators.

Features:

- 4x NVIDIA A100/RTX A6000 support

- 2x Intel Xeon Scalable / 2x AMD EPYC support

- up to 4TB DDR4 3200MHz ECC/REG support

- 1U GPU server

FORSITE HPC 4029 AI SXM NVLink supercomputer

Hybrid GPU server for AI/DL tasks. 8x NVIDIA A100 accelerators connected by a high-speed NVLink interface.

Features:

- 8x NVIDIA A100-80GB SXM4 support

- 2x Intel Xeon Scalable / 2x AMD EPYC support

- up to 8TB 3DS ECC DDR4-3200MHz support

- 4U GPU server

FORSITE HPC-6049 AI supercomputer

High-density solution for inference tasks: 2x Intel Xeon Scalable processors and room for up to 20 T4/A2 GPU accelerators.

Features:

- 20x NVIDIA Tesla T4/A2 support

- 2x Intel Xeon Scalable support

- up to 3TB DDR4 2933MHz ECC/REG support

- 4U GPU server

Inference is the stage at which a trained neural network is put to work. As new data arrives in the system in the form of images, speech, or image search queries, inference produces the answers and recommendations that form the basis of most artificial intelligence services. A server equipped with one NVIDIA GPU provides 27 times higher performance in inference tasks compared to a CPU-based server, which leads to a significant reduction in data center infrastructure costs.
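As a rough sizing illustration of the 27x figure, the sketch below estimates how many single-GPU servers would match the inference throughput of a given CPU fleet. The function name `gpu_servers_needed` and the fleet size of 100 are hypothetical, and the calculation assumes throughput scales linearly with the number of servers, which real deployments only approximate.

```python
import math


def gpu_servers_needed(cpu_servers, speedup=27.0):
    """Single-GPU servers needed to match the inference throughput of
    cpu_servers CPU-based servers, assuming linear scaling."""
    if cpu_servers < 0 or speedup <= 0:
        raise ValueError("cpu_servers must be >= 0 and speedup must be > 0")
    return math.ceil(cpu_servers / speedup)


# Hypothetical fleet of 100 CPU servers and the quoted 27x speedup.
print(gpu_servers_needed(100))  # prints 4
```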